AI Companies: Publish Your Whistleblowing Policies

Blueprint joins open call to AI leaders to establish and publicise dedicated, protected whistleblowing channels

Advanced AI presents a range of risks, from the catastrophic risk of systems escaping human control, to product safety failures and unfair or inequitable outcomes for those who are obliged to interact with these systems. There is no question that we need whistleblowers in this sector.

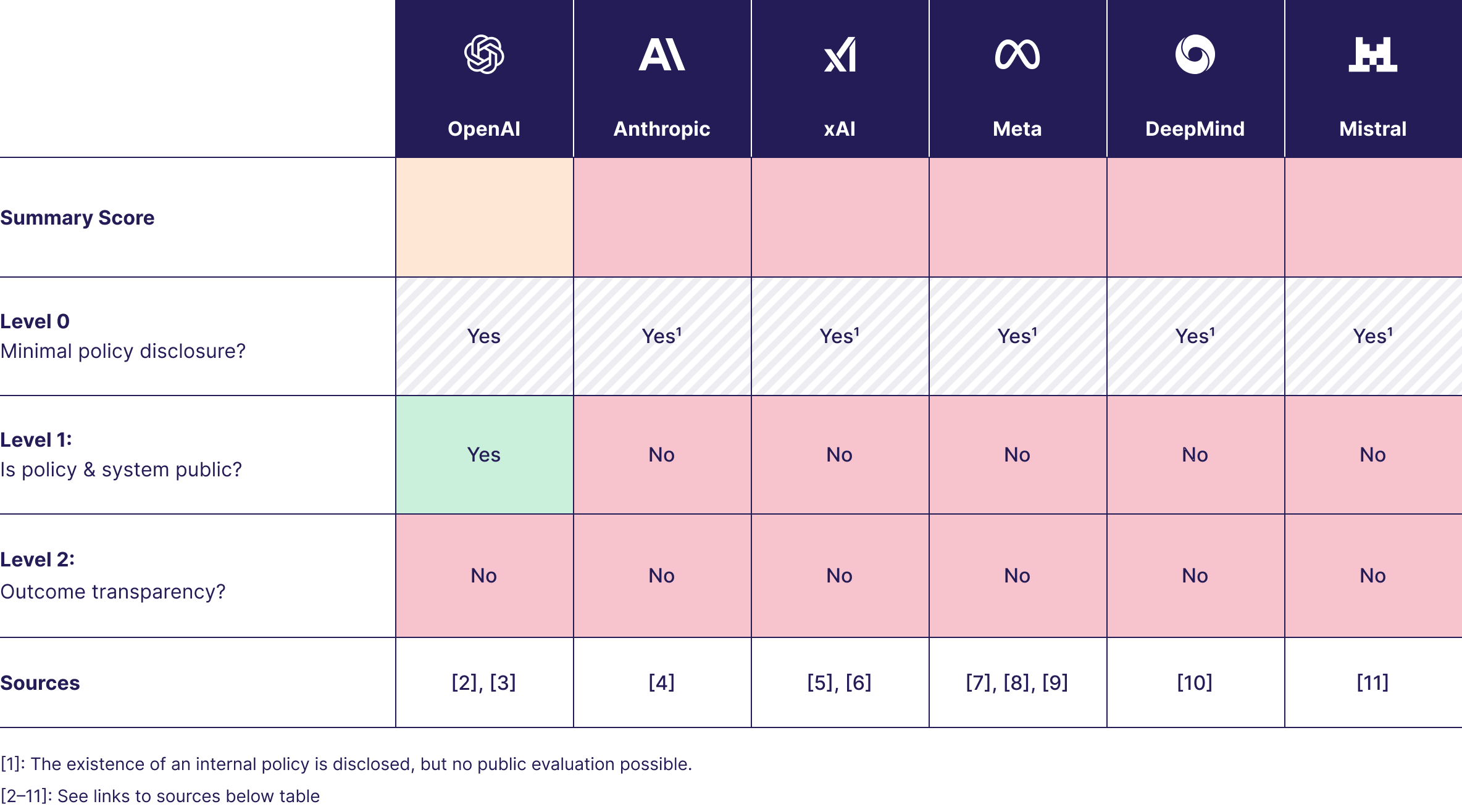

Despite this, the major AI labs’ commitment to facilitating internal whistleblower disclosures has been lacking. Therefore we are proud to join a campaign urging AI companies to make their whistleblowing policies public. Though a number of the major AI labs claim to have whistleblowing policies, so far only OpenAI have made their policy publicly available.

The open letter, organised by the AI Whistleblowing Initiative, is signed by a number of whistleblowing, human rights and AI-focused organisations, plus a number of prominent individuals. These include Stuart Russell - often referred to as one of the ‘godfathers’ of AI - and Lawrence Lessig.

The letter reads:

AI companies should make their whistleblowing system’s effectiveness and trustworthiness transparent to the public. The first, baseline, step is to make their whistleblowing policies public. This would benefit the public, potential whistleblowers, policy makers, and even the companies themselves.

A policy on its own is a good start towards AI labs recognising whistleblowers, but the open letter also calls for outcome transparency - such as an annual report detailing the number of disclosures received or investigated.

Internal channels, which employees within the major AI labs can use to raise their concerns, are essential, but they are only part of the puzzle. Where internal whistleblowing channels are ineffective - or where a whistleblower has good reason to believe that they may not work properly - external channels such as regulators are necessary.

While several jurisdictions have set up their own bodies to supervise AI development, including the EU’s AI Office and the UK AISI, to date none has set up a dropbox for whistleblowing disclosures.